Going Beyond Manual Experiments to Scale Materials Discovery

The glass ceiling of manual chemistry

Step into any chemistry lab, and you will encounter the familiar clichés: scientists in white coats and protective goggles, pipettes in hand, laser-focused on crafting new materials, one reaction at a time. This artisanal model has its merits; it is at the origin of our modern society. However, it cannot keep pace with today’s accelerating demand for innovation, whether to meet rising energy needs or to mitigate climate change.

Why is that, you may ask? Several reasons:

- Time-to-discovery: Moving a single formulation from an idea to a validated product often takes more than a decade. It is a lengthy and challenging process, with numerous hurdles and unexpected delays.

- Talent bottleneck: Every step depends on PhD-level expertise, which takes years to acquire, making R&D hard to scale, especially outside of well-funded academic and corporate labs.

- Reproducibility gaps: Manual protocols vary from lab to lab, and experimental notebooks often omit critical metadata (such as lab humidity, stirring speed, and age/storage of stock solutions), resulting in procedures that are poorly standardized and hard to reproduce.

In short, the traditional chemistry lab remains a powerful tool for creative exploration, but it is unequal to the scale, reliability, and speed requirements of today’s demand for innovation. For the breakthroughs in materials needed for clean energy, electronics, and climate tech, we must find a better way.

HTE: a step-change but not the endgame

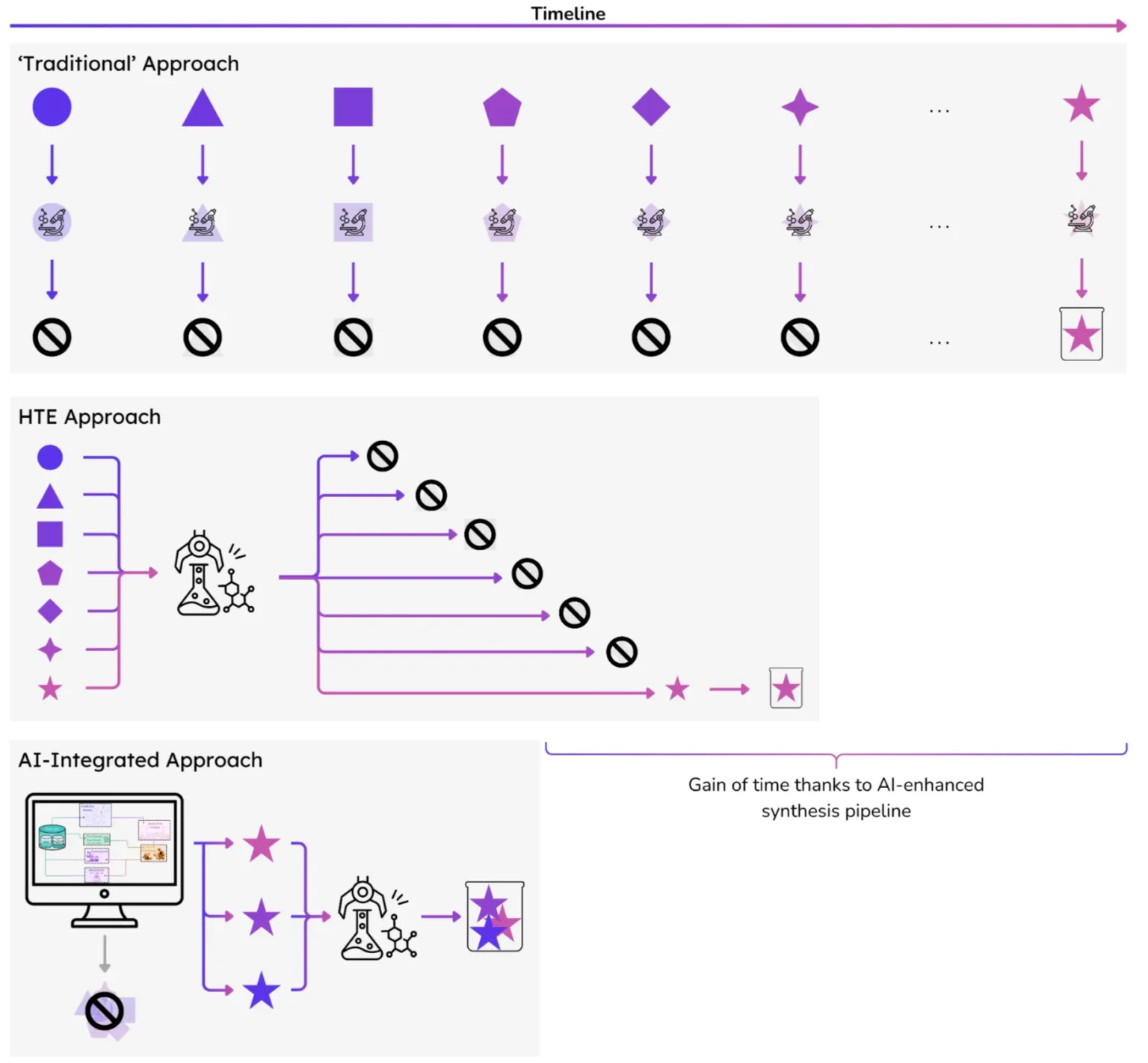

High-throughput experimentation (HTE), the practice of running dozens or hundreds of reactions in parallel using at least semi-automated robots, addresses part of the problem with manual synthesis.

First, the hardware logs every detail of each experiment (e.g., volume of precursors added, sample pH, and temperature) in a structured data format rather than relying on lab notebook anecdotes, which fosters reproducibility. Without such records, results cannot be systematically reproduced, which hinders scientific progress and prevents the development of a holistic understanding of chemical procedures [1].

Second, parallel workflows accelerate the pace of experiments by one to two orders of magnitude, even when compared to highly trained and motivated laboratory chemists, so labs can test significantly more samples. For instance, in the life sciences, where HTE has a longer history, Pfizer has used HTE to scale from 50 to 3,000 assays per week [2]. Initial successes in life sciences have spurred the development of HTE for catalysis, optoelectronics, and nanomaterials, utilizing glove-box robotics (for handling air-sensitive materials) and high-pressure reactors (to test reactions under extreme conditions) [3].
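To make the idea of a structured experiment log concrete, here is a minimal sketch in Python. The class name and every field name are hypothetical, chosen to mirror the metadata mentioned in this post (precursor volumes, pH, temperature, stirring speed, humidity); it is not the schema of any particular HTE platform.

```python
from dataclasses import dataclass, asdict
import json


@dataclass(frozen=True)
class ExperimentRecord:
    """One reaction, as a robot might log it (all field names are hypothetical)."""
    sample_id: str
    precursor_volumes_ml: dict  # precursor name -> dispensed volume in mL
    ph: float
    temperature_c: float
    stirring_rpm: int
    lab_humidity_pct: float


record = ExperimentRecord(
    sample_id="plate01-A1",
    precursor_volumes_ml={"precursor_a": 0.50, "precursor_b": 1.25},
    ph=6.8,
    temperature_c=80.0,
    stirring_rpm=600,
    lab_humidity_pct=41.0,
)

# Serialize to JSON so the same record can be stored, queried, and replayed.
serialized = json.dumps(asdict(record), sort_keys=True)

# Reading the record back yields exactly the same conditions.
restored = ExperimentRecord(**json.loads(serialized))
print(restored == record)
```

Because every run carries the same fields, two labs can compare conditions field by field instead of reconciling free-form notebook entries.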

Because it can massively increase both the volume and the organization of experimental data, HTE is often labeled the future of experimental chemistry. That said, three hard limits remain.

1. Capital cost: HTE instruments, particularly specialized robotics for synthesis, characterization, and testing, remain a multi-million-dollar investment.

2. Throughput: While HTE allows for a greater number of experiments, the sheer size of the combinatorial space of possible materials (i.e., all the ways atoms can be arranged in 3D space) is so prohibitively vast that we are still far from being able to efficiently and systematically identify materials with desirable properties through wet lab experimentation alone.

3. Manual oversight: Human skills are still required for handling edge cases and guiding complex scenarios (we will set this one aside for now and come back to it later).
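A back-of-the-envelope calculation gives a feel for the throughput limit. The numbers below are illustrative assumptions rather than figures from this post, except for the 3,000 experiments per week, which reuses the life-science throughput quoted earlier as a stand-in. The sketch counts only which elements are combined and in what ratio; it ignores crystal structure entirely, so it undercounts the real space.

```python
from math import comb

# Illustrative assumptions (not figures from the post, unless noted):
N_ELEMENTS = 80          # elements one might realistically consider
MAX_COMPONENTS = 4       # up to quaternary compositions
RATIOS_PER_SYSTEM = 100  # stoichiometric ratios tried per element combination
ASSAYS_PER_WEEK = 3_000  # weekly HTE throughput quoted earlier in the post

# Number of distinct element combinations with 1 to MAX_COMPONENTS elements.
element_systems = sum(comb(N_ELEMENTS, k) for k in range(1, MAX_COMPONENTS + 1))

# Each combination is tried at a fixed number of ratios.
candidates = element_systems * RATIOS_PER_SYSTEM

# Time to test every candidate once at the assumed throughput.
years_needed = candidates / ASSAYS_PER_WEEK / 52

print(f"{element_systems:,} element systems, {candidates:,} candidates")
print(f"about {years_needed:,.0f} years at {ASSAYS_PER_WEEK:,} experiments per week")
```

Even under these conservative assumptions, exhaustively testing the space would take on the order of a thousand years, which is why brute-force experimentation alone does not scale.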

AI-Powered Discovery: From Bottleneck to Flywheel

So, if HTE is not enough, what can we do?

What if we could reverse the process? Instead of relying on trial-and-error experimentation to discover promising materials, what if we could design them backward, starting from a desired property and using AI to propose viable candidates?

This is the promise of inverse design, powered by recent advances in computational chemistry and machine learning. Over the past decade, high-throughput simulations based on methods such as density functional theory (DFT) have generated millions of quantum-level data points across open databases such as the Materials Project, OC20, and LeMaterial [4].

At Entalpic, we use these computational datasets, once used solely for understanding experiments, to train generative AI models (see our previous blog post [5]) that create novel crystal structures using crystallographic principles, guided by predictive machine learning models [6] that estimate their stability, cost, and performance. This workflow enables us to explore the chemical space at an incredible scale: we can screen billions of candidates in silico and then surface the top 0.00001% for targeted experimental validation.
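To put that funnel in numbers: 0.00001% is a fraction of 1e-7, so one billion screened candidates leave about 100 for the lab. The sketch below illustrates the generate, score, and shortlist pattern in Python. It is a toy under stated assumptions, not Entalpic's pipeline: `generate_candidates` and `predicted_score` are hypothetical stand-ins for a generative model and a property predictor, and the equal weighting of the two features is arbitrary.

```python
import heapq
import random

TOP_FRACTION = 0.00001 / 100  # "top 0.00001%" expressed as a fraction (1e-7)


def shortlist_size(n_candidates: int) -> int:
    """Number of candidates that survive the in silico screen."""
    return max(1, round(n_candidates * TOP_FRACTION))


def generate_candidates(n: int, seed: int = 0):
    """Hypothetical stand-in for a generative model: yields (id, features)."""
    rng = random.Random(seed)
    for i in range(n):
        yield f"cand-{i}", (rng.random(), rng.random())


def predicted_score(features) -> float:
    """Hypothetical stand-in for a property predictor (higher is better)."""
    stability, performance = features
    return 0.5 * stability + 0.5 * performance


def screen(n_candidates: int, keep: int):
    """Stream candidates through the predictor and keep only the best `keep`."""
    scored = (
        (predicted_score(features), cand_id)
        for cand_id, features in generate_candidates(n_candidates)
    )
    # nlargest consumes the stream without holding every candidate in memory.
    return heapq.nlargest(keep, scored)


print(shortlist_size(1_000_000_000))  # 100
top = screen(n_candidates=100_000, keep=5)
for score, cand_id in top:
    print(f"{cand_id}: {score:.3f}")
```

Streaming the candidates through `heapq.nlargest` keeps memory use proportional to the shortlist rather than to the number of candidates screened, which matters once the pool reaches billions.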

Importantly, AI doesn’t replace chemists; it empowers them. Instead of searching for needles in a haystack, they focus their effort, skills, and intuition where they are most likely to succeed. Furthermore, a paradigm shift is underway: HTE is no longer only a means to validate hypotheses; it has also become a way to generate data that continuously improves the accuracy of AI models. With every synthesis and test, our models get smarter and suggest more relevant material candidates, forming a virtuous cycle between computation, learning, and the lab.

Figure 1. Simplified schematic of the role of AI in HTE
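The virtuous cycle between models and the lab can be sketched as a simple propose, measure, and update loop. Everything here is a toy assumption: `run_lab_experiment` replaces real synthesis and testing with a hidden function, and the surrogate "model" is a nearest-neighbour lookup rather than a trained network. The selection rule is also purely greedy, whereas practical systems usually balance exploiting good predictions against exploring uncertain regions.

```python
import random


def run_lab_experiment(x: float) -> float:
    """Hypothetical stand-in for synthesis + testing: hidden property, peak at 0.7."""
    return 1.0 - (x - 0.7) ** 2


def predict(x: float, measured: dict) -> float:
    """Toy surrogate model: reuse the measured value of the nearest tested point."""
    nearest = min(measured, key=lambda m: abs(m - x))
    return measured[nearest]


def closed_loop(candidates, n_rounds=5, batch_size=3, seed=0):
    """Alternate between model-guided proposals and new lab measurements."""
    rng = random.Random(seed)
    measured = {}

    # Initial round: a few random experiments to seed the surrogate.
    for x in rng.sample(candidates, batch_size):
        measured[x] = run_lab_experiment(x)

    for _ in range(n_rounds):
        untested = [x for x in candidates if x not in measured]
        # Propose: rank untested candidates by predicted property.
        untested.sort(key=lambda x: predict(x, measured), reverse=True)
        # Measure: each new result immediately enriches the surrogate's data.
        for x in untested[:batch_size]:
            measured[x] = run_lab_experiment(x)

    return measured


candidates = [i / 100 for i in range(101)]
measured = closed_loop(candidates)
best_x = max(measured, key=measured.get)
print(f"{len(measured)} experiments run, best candidate so far: x = {best_x}")
```

The point of the sketch is the data flow: each batch of measurements changes what the model proposes next, so the lab and the model improve together.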

It’s all about empowering chemists

Autonomy is a spectrum, not a switch. Fully autonomous labs, where every step of the materials development process, from synthesis and characterization to testing, is done robotically and without human intervention, still require a significant amount of additional instrumentation and software development to be commercially viable on a large scale [7]. Even the best AI+HTE systems still need human chemists for three reasons:

1. Out-of-Operating-Envelope (OOO) ideas

Robots thrive inside calibrated windows — that is, environments with well-explored and well-defined parameters (temperatures, concentrations, ingredient types, etc.). Breakthrough science often starts outside them. Novel synthesis procedures, especially those involving conditions such as novel precursors and extreme pH, first require a chemist’s hands-on proof of concept before the procedure is suitable for HTE.

2. Atomic-precision surfaces and defect chemistry

Many breakthrough materials—like catalysts, battery interfaces, or quantum devices—depend on subtle, atomic-scale features: a specific crystal facet, a missing atom, a precise dopant gradient. These effects can make or break performance, but achieving them requires extreme control. Techniques like atomic-layer deposition (ALD) and molecular-beam epitaxy (MBE) can sculpt matter at this scale—but they remain slow, delicate, and largely incompatible with HTE. For now, they are still the domain of skilled specialists, not robots.

3. Exception handling & creative leaps

Robots execute playbooks; humans rewrite them. When an unexpected color shift, errant powder X-ray diffraction (PXRD) peak, or outlier activity appears, a chemist spots the clue, reformulates, or flips to a new material class.

So, for the coming years, we believe in a hybrid playbook: AI models and robots handle the breadth—screening thousands of formulations, running systematic DOE (Design of Experiments) grids, and feeding automated characterization streams. Scientists handle the depth—probing edge cases, uncovering mechanisms, and making the conceptual leaps that turn a good material into a market-ready one.
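As an illustration of what a systematic DOE grid looks like in practice, the sketch below enumerates a full-factorial design in Python. The factor names and levels are hypothetical examples, and full factorial is only one of several DOE strategies; fractional or space-filling designs are often preferred when the number of factors grows.

```python
from itertools import product

# Hypothetical factors and levels for a synthesis campaign.
factors = {
    "temperature_c": [60, 80, 100],
    "ph": [4.0, 7.0, 10.0],
    "precursor_ratio": [0.5, 1.0, 2.0],
    "stirring_rpm": [300, 600],
}


def full_factorial(factors: dict) -> list:
    """Return one run (a dict of settings) per combination of factor levels."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*factors.values())]


runs = full_factorial(factors)
print(len(runs))  # 54
print(runs[0])
```

Each dictionary in `runs` is one set of conditions a robot could execute, which is exactly the kind of breadth-first work that suits automation.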

At Entalpic, our goal is not a sci-fi “dark lab” but a super-accelerated pipeline in which a generative AI engine explores the material space at scale and feeds promising candidates to chemists and HTE pipelines, all reinforcing one another to deliver next-generation catalysts, batteries, and alloys fast enough to matter for climate and industry.

Learn more

If you are eager to go deeper, explore these sources:

1. Baker, M. 1,500 Scientists Lift the Lid on Reproducibility. Nature 533 (2016): 452–454. https://doi.org/10.1038/533452a

2. Pereira, D.A., et al. Origin and Evolution of High Throughput Screening. Br J Pharmacol 152(1) (2007): 53–61. https://doi.org/10.1038/sj.bjp.0707373

3. Haguet, Q., Heuson, E., Paul, S. REALCAT: High-Throughput Screening Platform Dedicated to Biocatalysts Exploration, Screening and Design. Invited Seminar, Sun Yat-sen University (2023).

4. LeMaterial. https://lematerial.org

5. Deleu, T. How is AI accelerating Materials Discovery—and why are GFlowNets a core ingredient? Entalpic (2025). https://aqua-tapir-413535.hostingersite.com/gflownets-ai-materials-discovery/

6. Duval, A., et al. A Hitchhiker’s Guide to Geometric GNNs for 3D Atomic Systems. arXiv (2023). https://doi.org/10.48550/arXiv.2312.07511

7. Seifrid, M., et al. Autonomous Chemical Experiments: Challenges and Perspectives on Establishing a Self-driving Lab. Accounts of Chemical Research 55(17) (2022): 2454–2466. https://doi.org/10.1021/acs.accounts.2c00220

Stay tuned; we’ll continue sharing more about how AI is reshaping materials discovery and how your industry can lead this change.

Written by Samuel Gleason, ML & Chemistry Researcher.

Contact the Entalpic team at contact@entalpic.ai
